Delhi: Technologies based on Artificial Intelligence (AI) and Machine Learning (ML) have seen dramatic increases in capability, accessibility, and deployment in recent years, and their growth shows no sign of abating. While the most visible AI technology is marketed as such, learning-based methods are employed far more widely behind the scenes. From route-finding on digital maps to language translation, biometric identification to political campaigning, industrial process management to food supply logistics, and banking and finance to healthcare, AI saturates every aspect of the present-day connected world.

Col. Inderjeet explains why deepfakes are the most serious AI crime threat. As AI is adopted for productive use cases, AI-enabled cybercrimes are also growing, such as identity fraud based on deepfakes, synthetic images, and deepfake videos.

Deepfakes are among the fastest-growing tactics used by fraudsters, who are now turning to synthetic identities to open new accounts. A synthetic identity can be completely faked, or it can be a unique amalgamation of false information and information that has been stolen or modified. The Personal Identifying Information (PII) involved may be hacked from a database, phished from an unsuspecting person, or bought on the dark web. Because the impact on those whose PII has been compromised or stolen is limited, this kind of fraud often goes unnoticed for longer than traditional identity fraud.

Deepfakes are the result of using artificial intelligence to digitally recreate an individual’s appearance with great accuracy, making it possible to show a person saying something they never said or appearing somewhere they have never been. YouTube is rife with examples of varying quality, and it is easy to see how a well-made deepfake could be damaging to someone who is targeted maliciously.

Deepfakes have been ranked as one of the most serious Artificial Intelligence (AI) crime threats because of the wide array of criminal and terrorist activities they can be used for.

When the term was first coined, the idea of deepfakes triggered widespread concern mostly centered around the misuse of this technology in spreading misinformation, especially in politics. Another concern that emerged revolved around bad actors using deepfakes for extortion, blackmail, and fraud for financial gain.

The rise of deepfakes and synthetic AI-enabled technology makes it easier for fraudsters to generate very realistic-looking images or videos of people for these synthetic identities and to commit fraud on a serious scale. Plenty of mobile apps allow anyone to convincingly replace the faces of celebrities with their own, even in videos, and turn the results into viral social media content.

Fake audio or video content has been ranked by experts as the most worrisome use of Artificial Intelligence in terms of its potential applications for cybercrime or cyber terrorism, according to researchers from University College London (UCL), who have released a ranking of what experts believe to be the most serious AI crime threats.

The study, published in Crime Science and funded by the Dawes Centre for Future Crime at UCL (and available as a policy briefing), identified 20 ways AI could be used to facilitate crime over the next 15 years. These were ranked in order of concern, based on four considerations: the harm they could cause, their potential for criminal profit or gain, how easy they would be to carry out, and how difficult they would be to stop.

The threat of greatest concern is audio/video impersonation, followed by tailored phishing campaigns and driverless vehicles being used as weapons. Fake content would be difficult to detect and stop, and it could have a variety of aims, from discrediting a public figure to extracting funds by impersonating a couple's son or daughter in a video call. Such content may lead to widespread distrust of audio and visual evidence, which would itself be a societal harm.

Col. Inderjeet further explains, "Aside from the generation of fake content, five other AI-enabled crimes were judged to be of very high concern. These are using driverless vehicles as weapons, creating tailored spear-phishing attacks, disrupting AI-controlled systems, harvesting online information for the purposes of large-scale blackmail, and AI-authored fake news. The least worrying threats include forgery, AI-authored fake reviews, and AI-assisted stalking."

Unlike many traditional crimes, crimes in the digital realm can be easily shared, repeated, and even sold, allowing criminal techniques to be marketed and crime to be provided as a service. This means criminals may be able to outsource the more challenging aspects of their AI-based crime. These crimes can be classified as low, moderate, or high threats.

Low Threats: Low threats offer few benefits to criminals: they cause little harm, bring small profits, are usually not very achievable, and are relatively simple to defeat. In ascending order, these threats included forgery, then AI-assisted stalking and AI-authored fake reviews, and finally bias exploitation (or malicious use of platform algorithms), burglar bots (small remote drones with enough AI to assist with a break-in by stealing keys or opening doors), and avoiding detection by AI systems.

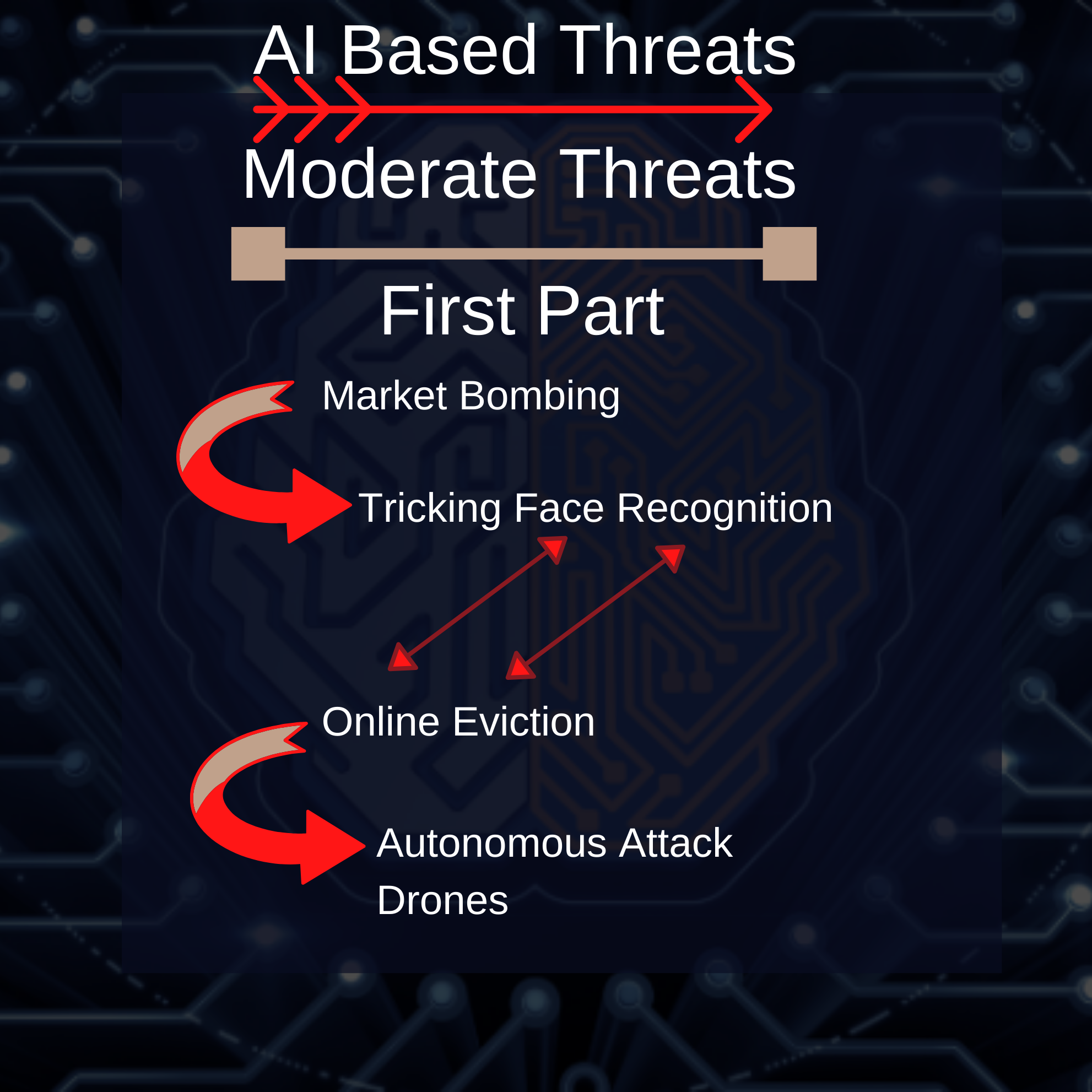

Moderate Threats: These threats turned out to be generally more neutral, with the four considerations averaging out to be neither good nor bad for the criminal, apart from a few outliers that still balanced out. These threats were divided into two tiers of severity. The first tier contained:

- Market Bombing (where financial markets are manipulated by trade patterns)

- Tricking Face Recognition

- Online Eviction (or blocking someone from access to essential online services)

- Autonomous Attack Drones for smuggling and transport disruptions.

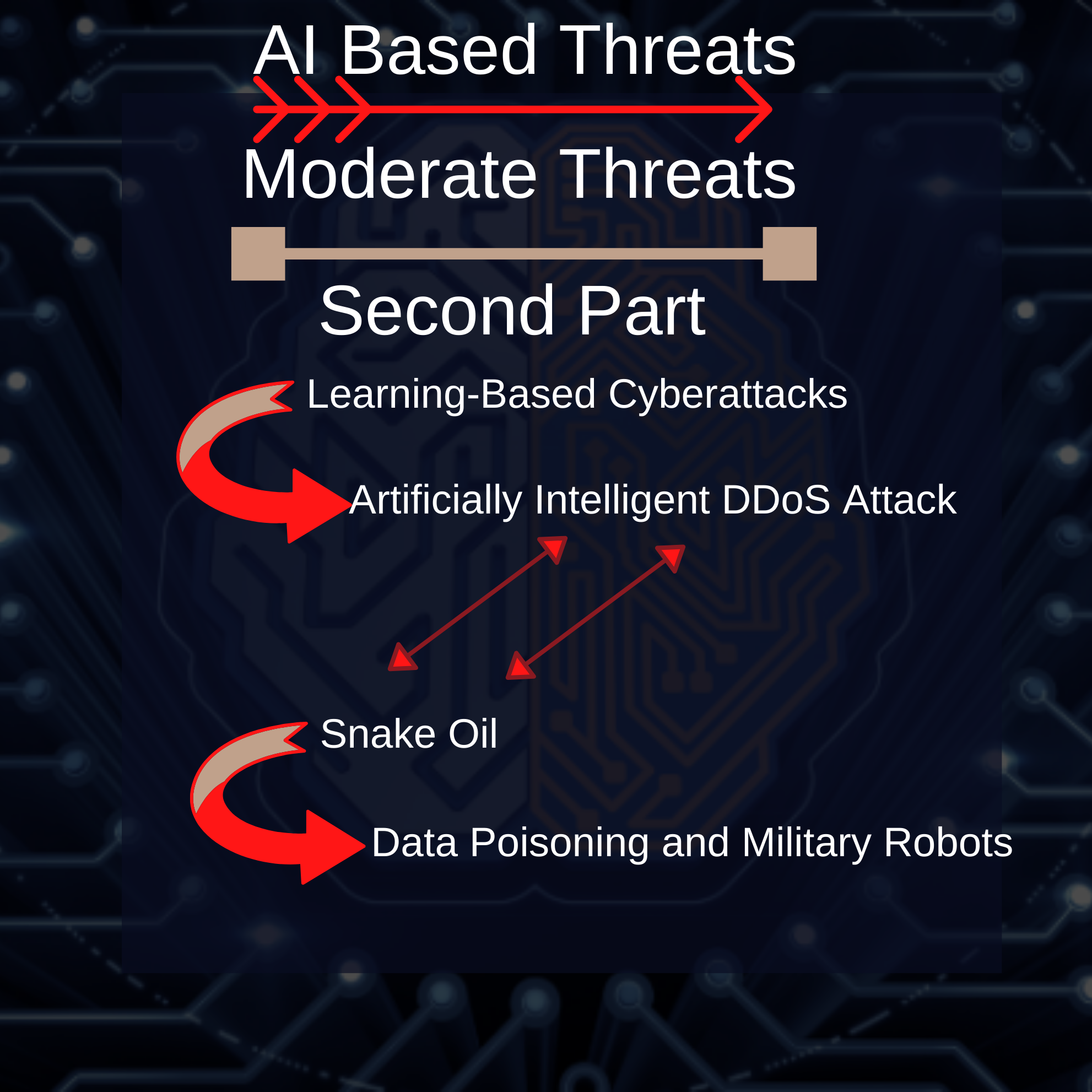

The second tier in the moderate range included:

- Learning-Based Cyberattacks

- Artificially Intelligent DDoS Attack

- Snake Oil, where fake AI is sold as a part of a misrepresented service

- Data Poisoning and Military Robots

The last two stand out because the injection of false data into a machine-learning program and the takeover of autonomous battlefield tools could both raise severe concerns.

High Threats: Finally, several threats were ranked as very concerning by the experts. These include:

- Disrupting AI-Controlled Systems

- Inflammatory AI-Authored Fake News

- Wide-Scale Blackmail

- Tailored Phishing (or what we usually describe as spear phishing)

- Use of Autonomous Vehicles as Weapons, which ranked just above the others listed here

The threat that ranked as most beneficial to the criminal across all four considerations was the use of Audio/Visual Impersonation, more commonly referred to as deepfakes.

Col. Inderjeet adds, "Of course, just because some threats, like deepfakes, are so much more impactful than others doesn’t mean that you can ignore the other threats. In fact, the opposite is true. While having someone literally put words in your mouth is obviously harmful, it could also be extremely harmful to have an assortment of negative reviews shared online, whether they were generated by AI or not."

In an increasingly online world, business opportunities are largely migrating to the Internet. As a result, you need to ensure that your business is protected against online threats of all kinds—again, regardless of whether AI is involved.

You can follow Col. Inderjeet on Twitter (@inderbarara) and Instagram (inderbarara).
