New Delhi: Facebook said the new rule applies to false or misleading content about COVID-19 and vaccines, climate change, elections, or other topics, so that fewer people see misinformation on its family of apps.


Taking Action Against People Who Repeatedly Share Misinformation https://t.co/GagjE0v7IA

— Facebook Newsroom (@fbnewsroom) May 26, 2021

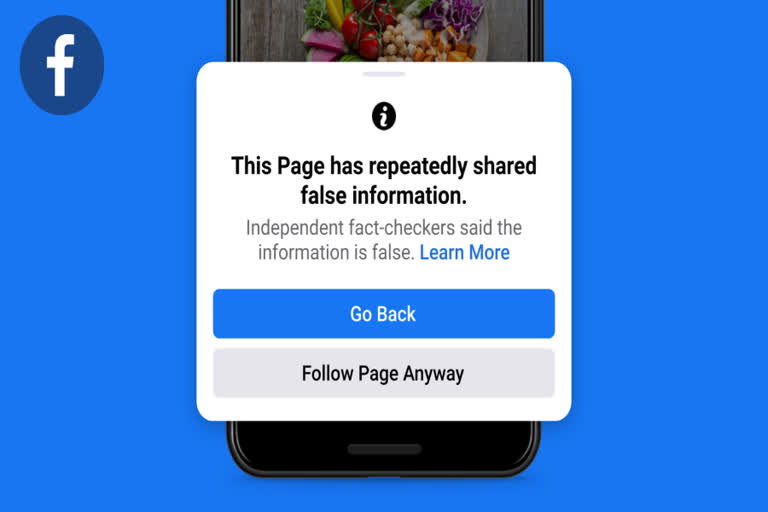

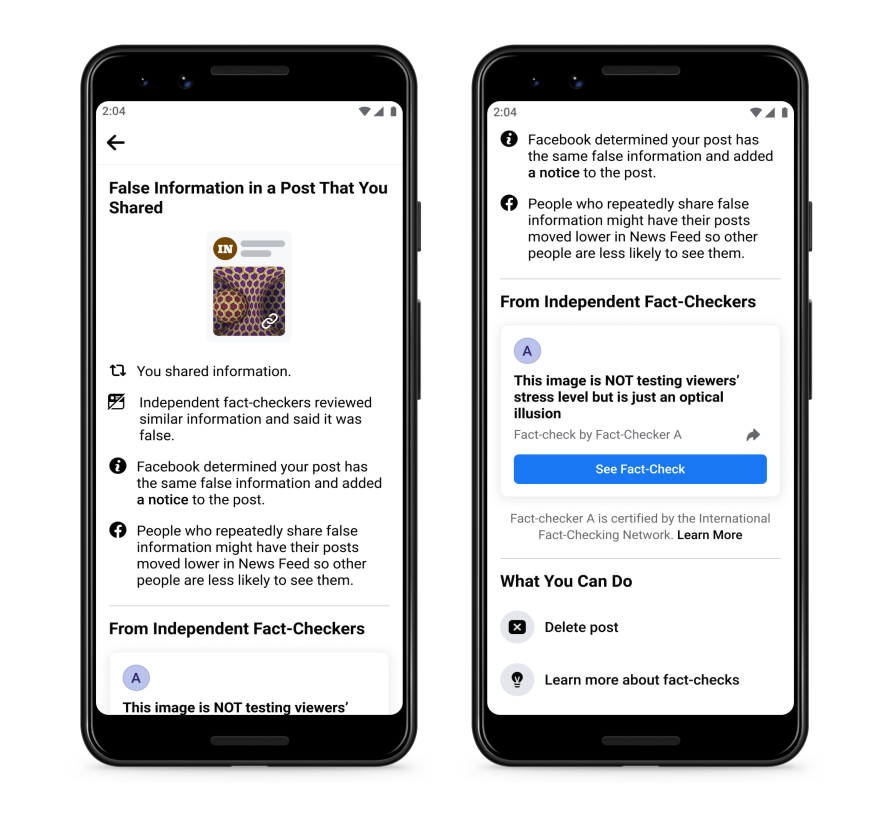

"Starting today, we will reduce the distribution of all posts in News Feed from an individual's Facebook account if they repeatedly share content that has been rated by one of our fact-checking partners. We already reduce a single post's reach in News Feed if it has been debunked," Facebook said.

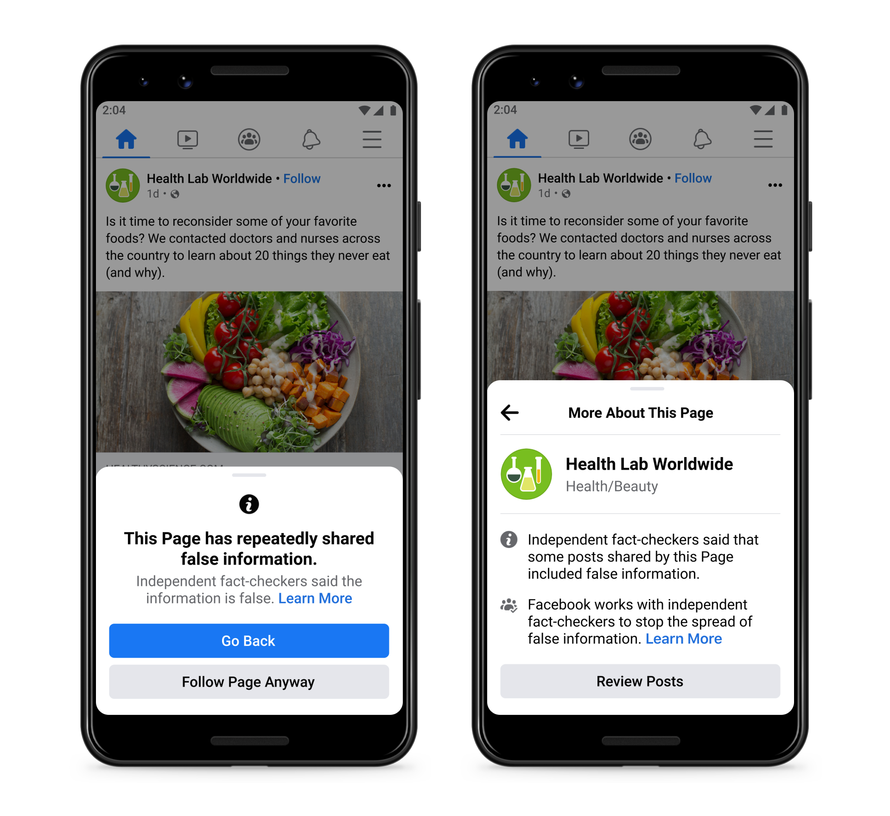

The company currently notifies people when they share content that a fact-checker later rates.

Facebook has now redesigned these notifications to make it easier to understand when this happens. The notification includes the fact-checker's article debunking the claim, as well as a prompt to share the article with their followers.

"It also includes a notice that people who repeatedly share false information may have their posts moved lower in News Feed so other people are less likely to see them," the social network said.

The company launched its fact-checking program in late 2016.

"We've taken stronger action against Pages, Groups, Instagram accounts and domains sharing misinformation and now, we're expanding some of these efforts to include penalties for individual Facebook accounts too," the company noted.


(Inputs from IANS)