Delhi: The cloud-based messaging platform Telegram has become a hotbed for pornographic Deepfakes. According to a security investigation by Sensity, a visual threat intelligence company based in the Netherlands, a "Deepfake ecosystem" exists on the messaging app.

Col. Inderjeet said, "It appears that a large network of people on the app has been using the technology to create nonconsensual fake images. At the heart of this 'Deepfake ecosystem' on the end-to-end encrypted messenger is an AI-powered bot that allows users to photo-realistically 'strip naked' clothed images of women."

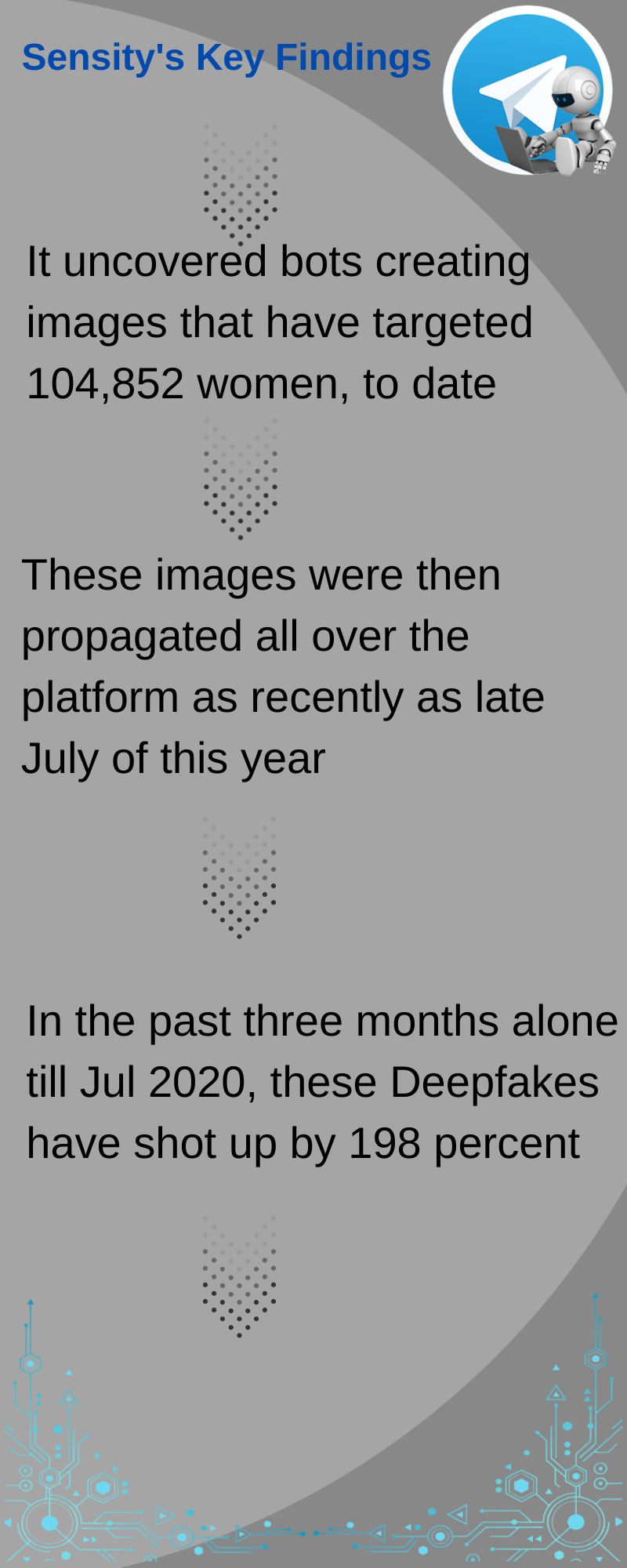

Over 680,000 women are unaware that their photos have been uploaded to a bot on Telegram and turned into photo-realistic simulated nude images without their consent. In these photos, which are modified by the AI-based bot, the victims appear naked, and the images are being shared on Telegram channels.

The bot is free to use: users simply join a private messaging channel on Telegram. To "strip" an image, a user uploads a photo of a person and the bot sends back an image of her appearing naked. So far, all of the images have been of women. The transformation costs nothing, although users can pay to upload multiple images and receive them back without a watermark.

This is not the first time Deepfake technology has been used to "undress" women. In 2019, the 'DeepNude' app made many headlines, but its creator decided to shut it down after the controversy it generated. The program used a variant of the Deepfake technique dedicated to creating pornographic images. Its creator removed the tool from the internet shortly after its release in June 2019, for fear of misuse by Internet users. However, advanced users were able to reverse-engineer the software and adapt the code for bots on Telegram, among other platforms; such bots can also manage payments automatically.

DeepNude uses an artificial intelligence technique called Generative Adversarial Networks (GANs). The results vary: some images are clearly fake, with visible pixelation, while others are convincing enough to pass as real photos. DeepNude is easier to use and more accessible than Deepfakes have ever been. Whereas Deepfakes require a lot of technical expertise, huge datasets, and access to expensive graphics cards, DeepNude works from a single photo and needs no technical expertise.

Some 104,852 images of women have been posted publicly on the app, with 70% of the photos coming from social media or private sources. According to a survey Sensity conducted among users of the bot, 63% of the Deepfakes are generated from images of women the users know personally, taken from social networks or personal photos, to create naked avatars. 16% of users prefer to generate Deepfakes from celebrity faces, 8% from Instagram models or influencers, and 7% from random women. Sensity also notes that a limited number of the Deepfakes involve minors.

- Unlike the algorithms that make Deepfake videos (including nonconsensual sexual videos), the Telegram bot doesn't need thousands of images to work. A single image is enough to create a Deepfake.

- According to Sensity, these bots are powered by artificial intelligence and "strip" the clothes from a photo; the results are often used for public shaming and extortion.

- The firm describes the process as a kind of editing feature that anyone with a smartphone or a computer can easily use. Users simply pick a photo from their image gallery and let the bot generate the Deepfake. The photos can come from anywhere on the internet.

- There is obvious cause for concern given the threat of people being bullied or blackmailed, although the channel's administrator has claimed that the images aren't realistic enough for anything pernicious to happen.

Since Deepfakes started emerging in late 2017, the media and politicians have focused on the dangers they pose as a disinformation tool. But the most devastating use of Deepfakes has always been against women: whether to experiment with the technology using images of women without their consent, or to maliciously spread nonconsensual porn on the internet. DeepNude is an evolution of that technology: it is easier to use and produces images faster than Deepfakes. DeepNude also dispenses with any pretence that this technology can be used for anything other than claiming ownership over women's bodies.

Also Read: AI Based Threats explained by Col. Inderjeet Singh, DG, CSAI

Col. Inderjeet further adds, "Deepfakes have become a widespread, international phenomenon, but platform moderation and legislation have so far failed to keep up with this fast-moving technology. In the meantime, women are victimized by Deepfakes and left behind in favour of a more US-centric political narrative. Though Deepfakes have been weaponized most often against unconsenting women, most headlines and political fears about them have focused on their fake news potential."

Let's be clear: unfortunately, this kind of abuse cannot be prevented as the technology becomes accessible to everyone, which could lead to some worrying scenarios. We expect an inevitable drift towards Deepfake technology being used for custom pornography, revenge porn, blackmail, reputation attacks and so on. Sensity's report also states that 70% of victims are private citizens, while the remaining 30% are celebrities.

A major concern is that some people could use this material to extort money from or threaten those who appear in the images, or to publicly humiliate the victims (the infamous "revenge porn").

You can follow Col. Inderjeet on Twitter (@inderbarara) and Instagram (inderbarara).