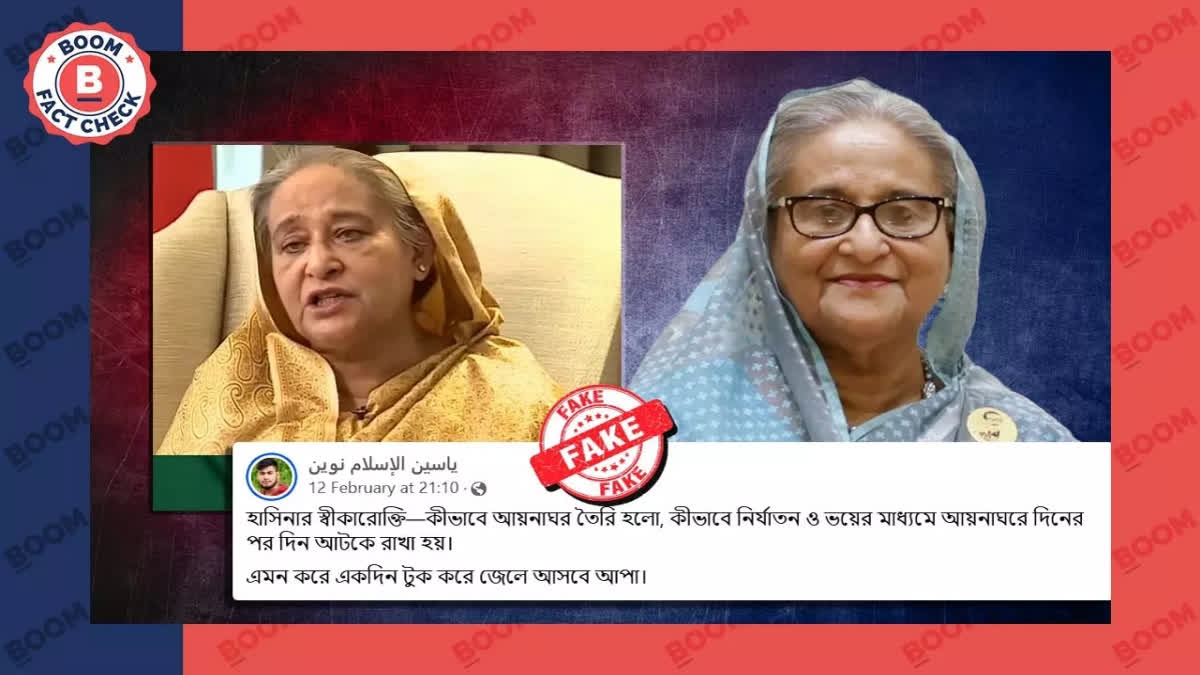

CLAIM: Sheikh Hasina has admitted to creating detention centers called 'aynaghor' in Bangladesh.

FACT CHECK: The video of Sheikh Hasina admitting to creating 'aynaghor' is a deepfake, made by manipulating a 2019 interview she gave to BBC News Bangla.

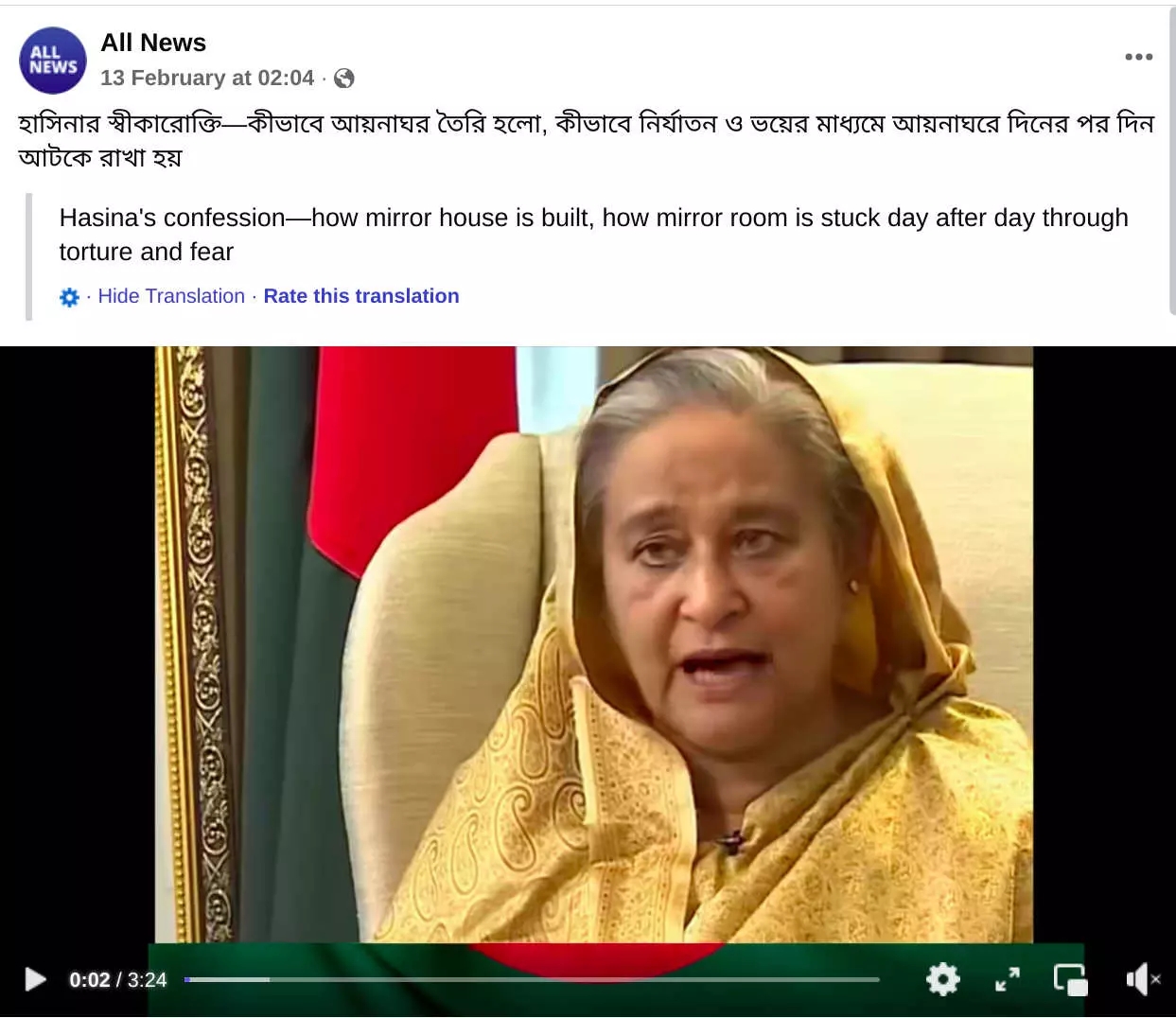

A video is being widely shared on Facebook, purportedly showing ousted Bangladeshi Prime Minister Sheikh Hasina admitting to having created "aynaghor" or "house of mirrors", a colloquial term for clandestine detention centers.

BOOM found this claim to be false. Our investigation provides strong evidence that the viral video was edited using artificial intelligence by manipulating a five-year-old interview of Hasina. Our partners at the Deepfakes Analysis Unit also confirmed to us, through expert consultation, that the viral video is AI-manipulated: it was created by overlaying a voice clone and adding lip-sync so that her lip movements match the fake audio.

Hasina's regime was marred by allegations of forced disappearances and the incarceration of dissidents at clandestine detention centers, where they were allegedly tortured. These detention centers were colloquially called "aynaghor" in Bangla, which translates to "house of mirrors" in English.

In the viral video, Sheikh Hasina can be heard saying, "Tarek Siddiqui gave the idea for the 'Aynaghor' (House of Mirror). The 'Aynaghor' was planned experimentally as there was a lot of publicity and criticism due to the crossfire. A three-foot by three-foot house was built and opposition party leaders and journalists were first brought in." She makes further comments about "aynaghor" elsewhere in the video.

The video was widely shared with a Bangla caption, which translates to English as, "Hasina's confession—how House of Mirror is built, how (people) were kept in House of Mirror day after day through torture and fear."

Verdict: Deepfake Made Using Old Interview Footage

BOOM analysed the video and found several jump cuts throughout, a sign that the footage had been manipulated. We ran a few keyframes of the video through a Google reverse image search, which led us to a 2019 interview of Hasina by BBC News Bangla.

We then compared keyframes from the YouTube upload of the BBC interview with those in the viral video and observed that Hasina's clothing and the background were identical. This led us to conclude that the BBC interview was the source footage for the viral clip.

In the original 22-minute BBC interview, Hasina does not mention 'aynaghor' or the 'house of mirrors'.

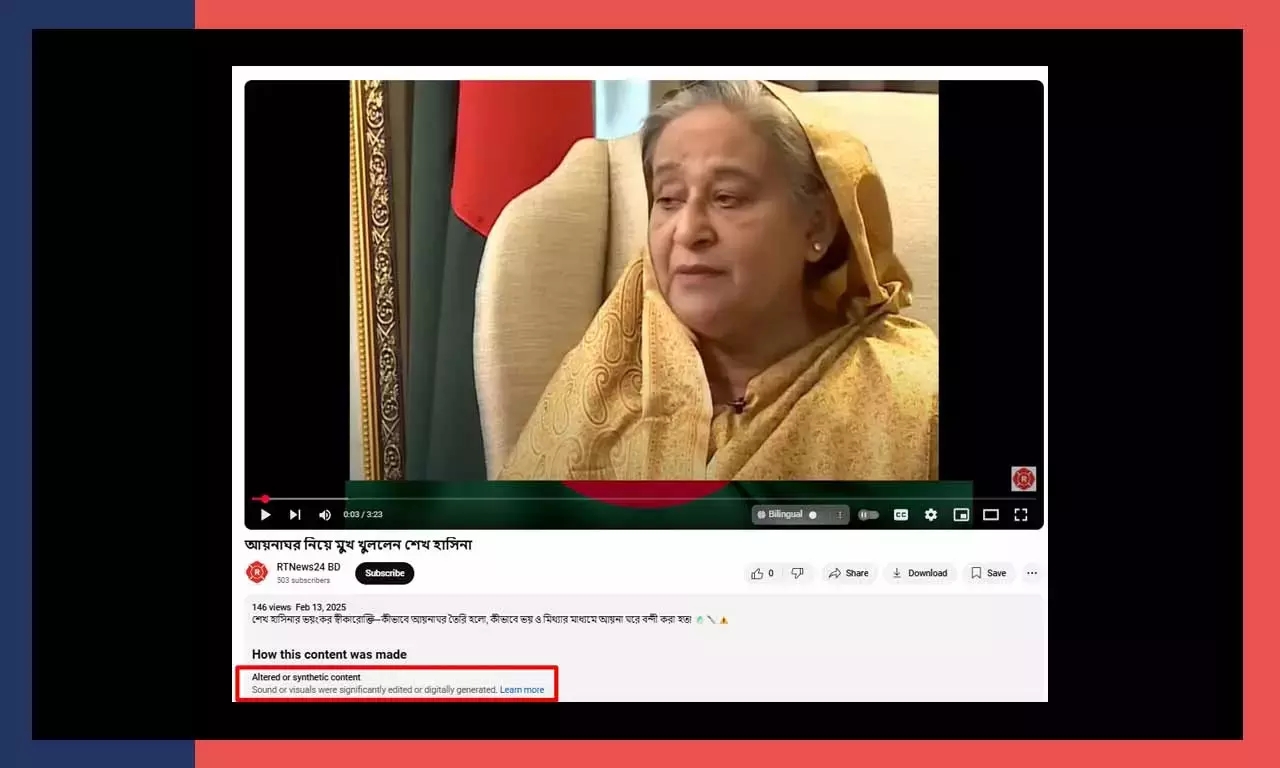

Additionally, the keyframe search led us to a video uploaded on YouTube on February 13, 2025. This video carried an 'Altered or Synthetic Media' label added by YouTube. YouTube applies this label to content that has been created or manipulated using artificial intelligence.

This indicated to us that the viral video had been altered, most likely using artificial intelligence.

BOOM also consulted our partners at the Deepfakes Analysis Unit (DAU), who analysed the video using detection tools and spoke to experts at Contrails AI. Both the tools and the experts concluded that the audio and video had been manipulated using AI.

The audio analysis estimated only a one per cent chance that the audio was real, indicating a very high likelihood that it was a voice clone.

The video analysis estimated a 31 per cent chance that the video was real, indicating a moderately high likelihood that it had been altered using AI. The experts at Contrails AI also pointed out to the DAU that "there are clear visible manipulation artifacts near the lips", and that the lips "are also shaking unnaturally when the audio is silent."

(This story was first published by BOOM and is being republished by ETV Bharat as part of Shakti Collective.)